Given an unsorted array, return whether an increasing subsequence of length 3 exists in the array.

Formally the function should:

Return true if there exist i, j, k such that arr[i] < arr[j] < arr[k] with 0 ≤ i < j < k ≤ n-1; otherwise return false.

Your algorithm should run in O(n) time complexity and O(1) space complexity.

Examples:

Given [1, 2, 3, 4, 5],

return true.

Given [5, 4, 3, 2, 1],

return false.

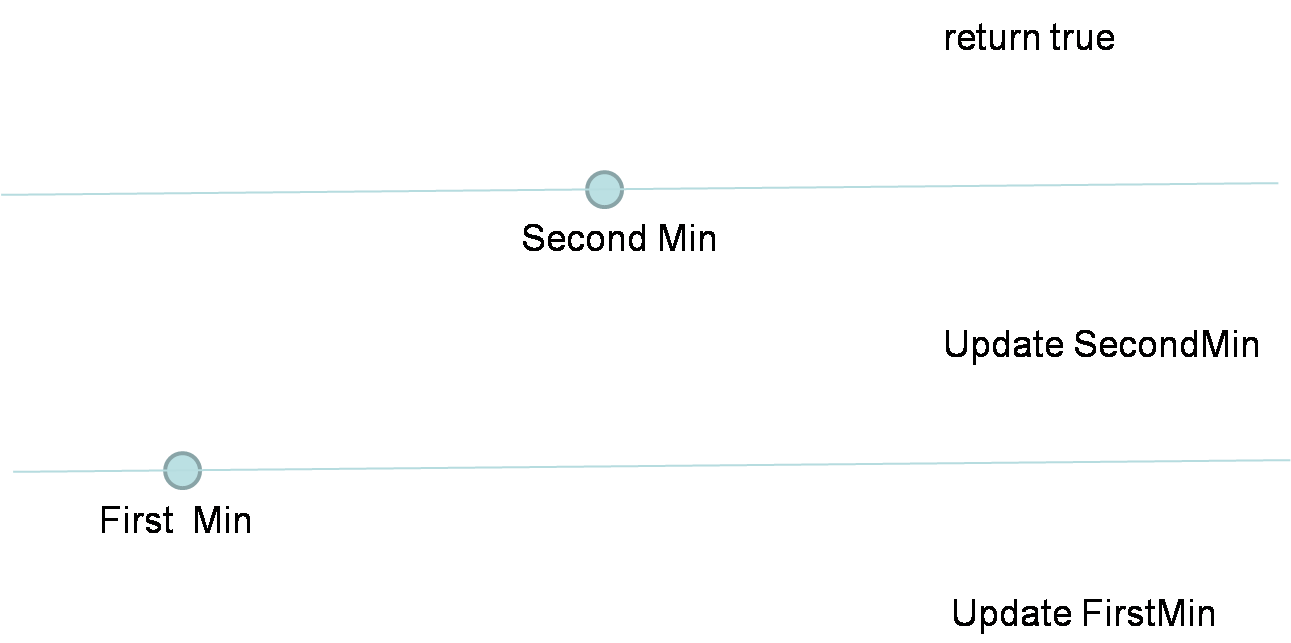

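Solution. The idea is to scan the array once (nums below is the input array, arr in the statement) while keeping two values: firstMin, the smallest element seen so far, and secondMin, the smallest element seen so far that has a smaller element somewhere before it. If any element nums[i] is greater than secondMin, return true, because firstMin < secondMin < nums[i]. If not, update the two values: when nums[i] ≤ firstMin, set firstMin = nums[i]; otherwise firstMin < nums[i] ≤ secondMin, so set secondMin = nums[i].

One subtlety: firstMin may be updated after secondMin was set, so the current firstMin can sit to the right of secondMin in the array. The check is still valid, because secondMin was only ever assigned when an earlier, smaller element existed, and that element together with secondMin and nums[i] forms the triplet. This uses a single pass and two variables, so it meets the O(n) time and O(1) space requirement.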
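The idea can be sketched in a few lines of Python. This is a minimal illustration, not the code from the linked post or from my repository; the function name `increasing_triplet` and the snake_case variables `first_min` and `second_min` (standing in for firstMin and secondMin) are my own naming assumptions.

```python
import math


def increasing_triplet(nums):
    """Return True if nums contains an increasing subsequence of length 3."""
    # Assumption: both trackers start at +infinity so the first element
    # always becomes first_min.
    first_min = math.inf   # smallest value seen so far
    second_min = math.inf  # smallest value seen so far with a smaller value before it

    for x in nums:
        if x <= first_min:
            # New smallest value; second_min stays valid because the
            # element that justified it still lies earlier in the array.
            first_min = x
        elif x <= second_min:
            # first_min < x <= second_min: a better middle candidate.
            second_min = x
        else:
            # x > second_min, and something smaller than second_min
            # appeared before it, so a triplet exists.
            return True
    return False
```

Using `<=` rather than `<` in both comparisons matters: it keeps equal values from being counted as increasing, so an input such as [1, 1, 1] correctly yields False.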

I came up with a solution that passes all the tests, but it is not as clean as the solution from this post.

Check my code on GitHub: link